In a now classic paper, Blakemore and Cooper (1970) showed that if a newborn cat is deprived of experiences with horizontal lines (i.e., is raised in an environment that is without horizontal stripes), it will fail to develop neurons in visual areas that are sensitive to horizontal edges. If the cat is exposed to horizontal lines while the visual areas are still optimally plastic (when the effects of learning and entrenchment have yet to set in), some neurons will quickly become selective to the feature, firing reliably when horizontal lines are part of the incoming sensation. These neurons are often referred to as ‘feature detectors’ even though the actual detection of the feature is always a network effect, that is, not the result of an isolated neuron firing, leading some to use the term tuned filters instead (see, e.g., Clark 1997).

It is well-known that our ability to categorize depends on our experiences with the objects of such categorization; moreover, research keeps finding that this phenomenon has more than a mere neurological ‘substrate’, that it permeates the very fabric of the brain (Abel et al. 1995, Sitnikova et al. 2006, Doursat & Petitot 2005). The study of this fact, however, proves very difficult for ethical reasons. Ideally, neuroscientists would experiment with children in order to see how, for example, sensory distinctions or, better yet, abstract concepts are acquired and represented. But the best means of accessing such precise data are ethically inconceivable.

To name one of the best methods currently on the market, in an ongoing project at Stanford University School of Medicine that aims to study the formation and entrenchment of sensory distinctions, Niell and Smith (2005) have recently been able to study the development, in real time, of whole populations of neurons and their connections straight from the retina to brain regions known to process visual information. The method consists of immobilizing the growing subject and performing two-photon imaging of neurons loaded with a fluorescent calcium indicator while experimenters control the stimulus in order to better understand the electrochemical activity. Now remember, the aim of their efforts is to study the development of neural connectivity and sensory capacity, which means subjecting the organism to this method for extended periods of time. Obviously, you can’t do this with children, so they are doing it with zebrafish, but the procedure promises to dazzle and reveal a lot about how sensory distinctions become entrenched in neural networks as a result of experience.

For the first time, it is possible to see how populations of neurons respond selectively to certain types of features, such as movement direction or size, and see to what extent, if any, there are innate representational constraints, such as the triggering of unlearned appearance concepts (Fodor 1998). As you probably guessed, current evidence seems to back up the claim that there are no innate representations, that they are instead learned from experience. The following are six reasons to believe that there are no pieces of knowledge or ideas that are unlearned.

1. Universal Approximators

Most complex neural networks are Universal Approximators because they can approximate any continuous function in their environment to arbitrary accuracy, given enough hidden units and enough time (Hornik, Stinchcombe & White 1989 or see, e.g., Zhang, Stanley & Smith 2004, Elman et al. 1996).[1] The Universal Approximator description applies to 3-layer neural networks and, by extension, to networks with a higher degree of complexity.

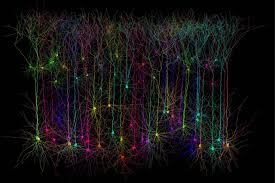

The human cerebral cortex is composed of innumerable overlapping 6-layer networks and each neuron can have up to 10,000 connections (see Damasio 1994, for a leisurely review). Moreover, there are many 3-layer networks in subcortical structures, as well as unlayered networks which consist of nuclei of neurons and can provide added plasticity to an already elastic arrangement.

Universal approximators make excellent blank slates. In this respect, the interesting thing to notice is that human brains approximate the functions that they do and not others because of the characteristics of their bodies and the way they afford interaction with the world. In this way, the interaction between body and world constitutes the environment, a set of “time-varying stochastic function[s] over a space of input units”, according to Rumelhart (1989), which the brain must approximate.
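To make the idea concrete, here is a minimal sketch of a 3-layer network (input, one hidden layer, output) learning a continuous function purely from examples. Everything in it is an assumption chosen for illustration: the target function `sin(pi * x)`, the eight hidden units, the learning rate, and the function name `train_three_layer_net` come from this sketch, not from any of the cited papers.

```python
import math
import random

def train_three_layer_net(target, n_hidden=8, epochs=2000, lr=0.02, seed=0):
    """Fit a 1-input, tanh-hidden, 1-output network to `target` on [-1, 1]."""
    rng = random.Random(seed)
    # Input -> hidden weights and biases, hidden -> output weights and bias.
    w1 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b1 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    b2 = 0.0

    xs = [-1 + 2 * i / 39 for i in range(40)]  # 40 evenly spaced "experiences"
    ys = [target(x) for x in xs]

    def predict(x):
        hidden = [math.tanh(w1[j] * x + b1[j]) for j in range(n_hidden)]
        return sum(w2[j] * hidden[j] for j in range(n_hidden)) + b2, hidden

    def mse():
        return sum((predict(x)[0] - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

    initial_mse = mse()
    for _ in range(epochs):
        order = list(range(len(xs)))
        rng.shuffle(order)
        for i in order:
            x, y = xs[i], ys[i]
            out, hidden = predict(x)
            err = out - y
            for j in range(n_hidden):
                # Backpropagate the error through the tanh hidden unit.
                grad_hidden = err * w2[j] * (1 - hidden[j] ** 2)
                w2[j] -= lr * err * hidden[j]
                w1[j] -= lr * grad_hidden * x
                b1[j] -= lr * grad_hidden
            b2 -= lr * err
    return initial_mse, mse()

# The network starts with random weights and no knowledge of the target.
initial_mse, final_mse = train_three_layer_net(lambda x: math.sin(math.pi * x))
print(f"error before learning: {initial_mse:.3f}, after: {final_mse:.3f}")
```

Nothing about the sine function is built into the network. Whatever fit it reaches comes from repeated exposure to input and output pairs, and swapping in a different continuous target changes what the same blank slate ends up approximating.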

You can learn anything, relatively quickly too. But, on the flip side, you are also likely to become what you surround yourself with. If you are surrounded by a bunch of idiots, well, sooner or later...

2. Neural Representations Mirror the World

Neural representation is symbolic but not as arbitrary as linguistic symbolization. Since neural networks are sensitive to the analog aspects of environmental functions through inductive and associative means, the internal code mirrors real-world structure in many ways, linking to what is represented through learning processes in which neural competition leads to self-organization and self-organizing maps (Kohonen & Hari 1999, Beatty 2001).

The structure of the mental representations arises out of the structure of what is represented (Damasio et al. 2004, Dehaene et al. 2005, Elman 2004) and what is done with that therein represented (Goswami & Ziegler 2006, Churchland & Churchland 2002, P.S. Churchland 2002). Content is everything, and that information isn't linked through logic.

3. Go Ahead and Kill a Few Braincells: Neurogenesis

We all grew up being told that we ought not to drink because it kills braincells, and braincells don't come back. Well, they do... every day. You know what actually kills them? Other braincells, because you didn't learn anything today. Yes, that's right. Since new neurons consume energy and resources, other braincells will kill them if they don't have to accept them into already existing neural networks (Corty & Freeman 2013).

Contrary to the long-held scientific dogma, there is widespread neurogenesis throughout the lifespan (Taupin 2006, Zhao et al. 2003, Gould et al. 1999). That argument in favor of ingrained pieces of knowledge went bust in 1998. However, the survival of new neurons depends on their becoming integrated into existing networks (Tashiro et al. 2006, So et al. 2006), which in turn depends to some degree on the richness and variety of the perceived environment. To say it another way, the more varied your life is, the stronger your brain will be.

Nevertheless, some networks are more entrenched than others because some processing domains are very rigidly articulated (e.g., sensory modalities, like vision, where a network’s expansion could come at the unthinkable cost of losing reliability). Other processing domains admit more flexibility and open-endedness, like language processing or memorizing your new favorite songs.

So what does kill braincells? Living a monotonous life, like that of a homeowner who day by day goes through some mindless routine. In an ironic twist of fate, that person that was telling you not to kill your braincells was probably killing way more braincells than you were by refusing to live beyond his or her routine (see, e.g., "Environmental enrichment promotes neurogenesis and changes the extracellular concentrations of glutamate and GABA in the hippocampus of aged rats" by Segovia et al. 2006).

4. The Nature of Ideas

Neural representations are function (i.e., action) specific. Knowledge gained through one action that is useful for a different, supplanting action does not transfer ‘free of charge’, so to speak (see, e.g., Thelen and Smith 1994). However, neural networks bootstrap one another towards the approximation of ever more complex functions, giving rise to emergent properties, whereby associations between committed functional webs (called modules in scientific circles) lead to new functional webs that subsume the previous ones.[2]

As previous action representations are co-opted instead of supplanted, the new functional web inherits the representations of its constituent functional webs insofar as these representations become associated. But this does not occur because they transfer the information; rather, it occurs because the neural networks learn to behave in concert, in tandem, for your greater good.

For 60 years, good old-fashioned cognitive scientists have wanted to convince the world that we are born with some ideas (a.k.a. Classical Cognitive Architecture), based on the ideas of Kant, Descartes and Plato. Even now, the television blares commercials about how your genetics cause this psychological disorder or that one, so take a pill for that chemical imbalance. Their assumption is that the chemical imbalance causes the psychological issue. They are wrong, and a new generation of cognitive scientists is just waiting for them to die out so that the next paradigm, dynamical systems, can take over, this time based on evidence instead of on theoretical assumptions and wishful thinking. Though it has been an uphill battle, the dynamical systems perspective of mind is certainly taking over.

The chemical imbalance doesn't cause the psychological disorder; it is the psychological disorder. Your mind isn't some byproduct of your nervous system. Your mind is your nervous system; hence, it processes information in the same way. Mind and body are one.

5. Artificial Neural Networks that Organize Themselves: An Example

Superimposed artificial self-organizing networks with recurrent connections (Kohonen 2006) and newly developed genetic algorithms that permit the neuron to grow, shrink, rotate, and reproduce or absorb another neuron (Ohtani et al. 2000), are bringing about an artificial medium capable of transparently exploring many computational issues that cannot be studied as precisely with biological brains.

These models do away with innate representations altogether. You can't "program" information into them; you literally have to raise them by giving them an environment fitting to what it is you actually want them to learn and do.
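What "raising" such a network looks like can be sketched with a tiny self-organizing map. This is a minimal illustration under stated assumptions, not the model from any cited paper: the 4x4 grid, the decay schedules, the helper names, and the synthetic three-indicator data are all invented for the example.

```python
import math
import random

def init_map(rows, cols, dim, rng):
    """One random weight vector per map unit: the map starts out knowing nothing."""
    return {(r, c): [rng.random() for _ in range(dim)]
            for r in range(rows) for c in range(cols)}

def best_matching_unit(som, x):
    """The unit whose weights are closest to the input wins the competition."""
    return min(som, key=lambda unit: sum((w - v) ** 2 for w, v in zip(som[unit], x)))

def quantization_error(som, samples):
    """Average distance between each sample and its winning unit."""
    total = 0.0
    for x in samples:
        w = som[best_matching_unit(som, x)]
        total += math.sqrt(sum((a - b) ** 2 for a, b in zip(w, x)))
    return total / len(samples)

def train(som, samples, rng, epochs=100):
    rows = 1 + max(r for r, _ in som)
    cols = 1 + max(c for _, c in som)
    total_steps = epochs * len(samples)
    step = 0
    for _ in range(epochs):
        for x in rng.sample(samples, len(samples)):
            remaining = 1 - step / total_steps
            lr = 0.01 + 0.5 * remaining                       # learning rate decays
            sigma = 0.5 + (max(rows, cols) / 2) * remaining   # neighbourhood shrinks
            win_r, win_c = best_matching_unit(som, x)
            for (r, c), w in som.items():
                grid_dist2 = (r - win_r) ** 2 + (c - win_c) ** 2
                influence = math.exp(-grid_dist2 / (2 * sigma ** 2))
                # The winner and its grid neighbours move towards the input.
                for i in range(len(w)):
                    w[i] += lr * influence * (x[i] - w[i])
            step += 1

rng = random.Random(0)
# Synthetic data (an assumption): two groups of "regions", three indicators each.
low = [[rng.uniform(0.0, 0.2) for _ in range(3)] for _ in range(20)]
high = [[rng.uniform(0.8, 1.0) for _ in range(3)] for _ in range(20)]
samples = low + high

som = init_map(4, 4, 3, rng)
error_before = quantization_error(som, samples)
train(som, samples, rng)
error_after = quantization_error(som, samples)

low_unit = best_matching_unit(som, [0.1, 0.1, 0.1])
high_unit = best_matching_unit(som, [0.9, 0.9, 0.9])
print(f"error before: {error_before:.3f}, after: {error_after:.3f}")
print("low group maps to", low_unit, "and high group maps to", high_unit)
```

No labels and no categories are given to the map. The two groups end up in different regions of the grid only because the units compete for the inputs and drag their neighbours along with them.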

The following is an example developed at the Helsinki University of Technology. An artificial neural network called a Self-Organizing Map was trained by feeding it 39 types of measurements of quality-of-life factors, such as nutrition and the availability and quality of education and healthcare, among many others. All the data used were provided by the World Bank. The following image is the map produced by the network.

For the benefit of our understanding, this very same map was then depicted as the world map below. My guess is that it won't take you long to figure out what colors represent a higher degree of poverty if you compare the image above to the one below.

6. How You Know What You Know

The moral of the story seems to be that neural networks have more plasticity than plastic. For example, if the visual cortex is damaged at birth, large, medium and small scale characteristics of the functional organization of normal visual cortexes appear in the auditory cortex of the damaged brain, as other functional webs specialize in the functions typically located in the visual cortex (Sharma, Angelucci & Sur 2000, Roe et al. 1990). This same effect can be replicated by surgically redirecting the optic nerves, which suggests that there is nothing special about the networks of the visual cortex or of any piece of cortex at all. So please, I beg of you, stop believing the hype that everything is genetic.

Findings like these led to the Neuronal Empiricism Hypothesis (Beatty 2001), which states that the whole of the cerebral cortex is just one large yet segmented unsupervised, knowledge-seeking, self-organizing neural network. But neural empiricism is characteristic not just of the 6-layer networks of the cerebral cortex; though these add vast computational power to the brain, neural organization as a function of experience is the rule rather than the exception even in ‘lower’ structures.

Krishnan et al. (2005), for example, show that language experience influences sensitivity to pitch in populations of neurons of the brainstem. It’s not only the 6-layer networks that just don’t need innate representations; embodied neural networks get their representational constraints for free, from the body in the world.

Early on in our development, sensations establish further yet lasting symbols in the mind, what Barsalou (1993) calls perceptual symbols. John Locke and David Hume called them simply ideas. Today, in scientific circles, these are commonly referred to as mental representations.

There is an important difference, however, between Barsalou’s account and Hume’s, mainly that in the latter ideas are construed as less lively yet still complete copies of sensory impressions and in the former the copies are only schematic. This difference merits being highlighted as it is easily overlooked, specifically because it concerns a not-oft observed difference between primarily inductive and primarily associative learning.

Given that Hume construed the emergence of ideas as he did, his tabula is a warehouse of countless sequences of images, smells, tastes, textures, emotions, in short all the objects the mind has ever had sensations of. In contrast, because schematic records are by definition abstractions, associations between properties if you will, Barsalou’s cognitive architecture may be construed as furnishing the mind firstly with analog approximations of continuous and co-occurring properties that have been experienced, approximations that can later be used through association to fill in the blanks of particular schematic representations.

This is why murder trials can no longer be had in the United States based on a single eye-witness testimony. It is also why, when a person is placed in front of a police lineup to identify the perpetrator, the police have to say, by law, something to the effect of "remember, the person that you are trying to identify may not be in the lineup". If the police do not say that, any identification becomes inadmissible in a court of law. Why? Because your memories are reconstructions through and through, and by not saying that they are inducing the person to create a false memory.

Differently stated, while it follows from Hume's theory that baby and toddler minds record the totality of experienced events (a view followed later by Sigmund Freud), only to abstract or induce, and later associate, recurrent properties from the set, contemporary findings indicate that, first and foremost, minds approximate the properties themselves, such as shapes and colors. It is only later that these approximations can be employed towards the formation of memories of concrete sequences of images, sounds, tastes, textures, smells, and combinations thereof.

Recall is surprisingly reconstructive. The resulting view is of a mind populated not by countless sensory impressions but by self-organizing approximations of sensed properties.
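Reconstructive recall of this kind can be illustrated with a toy associative memory. The sketch below uses a Hopfield-style network, a classic model that this article does not itself discuss, so treat it as an analogy under stated assumptions: the two stored patterns, the blanked-out cue, and the function names are all made up for the example.

```python
def train_hopfield(patterns):
    """Hebbian weights: units that are active together become linked."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += p[i] * p[j]
    return weights

def recall(weights, cue, max_steps=10):
    """Update every unit from its neighbours until the state stops changing."""
    state = list(cue)
    for _ in range(max_steps):
        new_state = []
        for i, row in enumerate(weights):
            field = sum(w * s for w, s in zip(row, state))
            # Keep the current value when the evidence is exactly balanced.
            new_state.append(1 if field > 0 else -1 if field < 0 else state[i])
        if new_state == state:
            break
        state = new_state
    return state

# Two stored "experiences", coded as +1/-1 properties (illustrative only).
stored = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
]
weights = train_hopfield(stored)

# A partial cue: the first two properties are unknown (0).
partial_cue = [0, 0, 1, 1, -1, -1, -1, -1]
recalled = recall(weights, partial_cue)
print(recalled == stored[0])  # True
```

The network never looks up a stored copy. It fills in the blanks from the associations between properties, which is also why a misleading cue could push a memory of this kind towards the wrong pattern.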

During the first months of life, uncommitted neural networks in the infant’s brain approximate in an associative manner the functions of color, form, movement, depth, texture, temperature, pitch, among many, many others. In so doing, the brain develops its own personal neural code, a code that is conjunctively contoured by the processing mechanism, by the individual’s experience, and by the characteristics of the input domains. As these approximations — these ideas — are established, they mediate the processing of incoming sensations. Perception emerges as the real-time process of mediation, as the integration of fading sensations with enduring mental representations.

You know what you know because you are one big ball of perception flowing through your own web of ideas. And you act how you act because you've been conditioned to smithereens.

Open your eyes and do things differently. Go live outside your routine. You'll be happier and healthier as a result.

[1] Sometimes it is claimed that 3-layer, feedforward neural networks are not real universal approximators, as these can only approximate problem domains that have graded structure. While it is an open question whether more complex networks (e.g., 6-layer recurrent networks) are able to reliably approximate non-graded problem domains, it should be recognized that the immense majority of problem domains have graded structure, as practically all natural variables have graded structure. The domain of morphology is a prime example. Generative linguistics traditionally applied a rule-governed (non-graded) approach to this domain; however, current evidence indicates that the morphological domain has gradient structure (Hay & Baayen 2005), and thus can be reliably approximated by 3-layer networks.

[2] Properties that result from a network’s functioning as a whole, i.e., that do not result from the activation of a single neuron, are known as emergent properties. Neural codes are network specific and emerge from the interaction of the implicated neurons responding to the body in the world.
